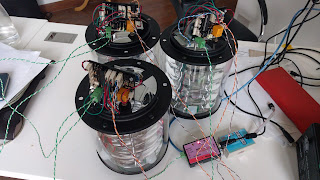

For this test, I have 3 light posts transmitting short 10-byte packets as fast as they possibly can given the constraints of the protocol. These constraints basically reduce to:

- You can’t send a packet when someone else is sending a packet

- After someone else finishes, you have to wait at least 1ms to be sure they are done

- After the 1ms wait, you must wait a random amount between 0 and 1ms to reduce the chance of starting at the same time as someone else
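
The three rules above can be sketched in a few lines of Python. This is only an illustration, not the firmware itself: the names `pick_backoff_ms` and `may_transmit` are made up for this example, and it assumes the device keeps a timestamp of the last activity it saw on the bus.

```python
import random

GUARD_MS = 1.0       # fixed wait after the bus goes quiet (rule 2)
MAX_RANDOM_MS = 1.0  # upper bound of the extra random wait (rule 3)

def pick_backoff_ms(rng=random):
    """Total quiet time this device wants to see before it may transmit."""
    return GUARD_MS + rng.uniform(0.0, MAX_RANDOM_MS)

def may_transmit(now_ms, last_bus_activity_ms, backoff_ms):
    """True once the bus has been idle for the whole backoff period.

    Any byte seen from another device moves last_bus_activity_ms forward,
    which restarts the wait and so also covers rule 1.
    """
    return (now_ms - last_bus_activity_ms) >= backoff_ms

if __name__ == "__main__":
    backoff = pick_backoff_ms()
    print(f"backoff = {backoff:.3f} ms")
    print(may_transmit(now_ms=10.0, last_bus_activity_ms=8.0, backoff_ms=backoff))
```

Drawing a fresh random backoff for every packet is what keeps two devices that collided once from colliding again on every retry.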

packetTime = 13.957secs

numPackets = 3525, numSkips = 434, numBadChecksum = 118

skip% = 12.31%, badChksum% = 3.35%

src=1, numSrcPackets=1072, 30.41% of all packets

src=2, numSrcPackets=1054, 29.90% of all packets

src=3, numSrcPackets=1281, 36.34% of all packets

total num device packets received = 3407

total num packets per second = 244.10

effective baudrate = 24410.32

baudrate efficiency vs 38400 line baudrate = 63.57%

For comparison, here are the results with only one device (src=1) transmitting on the bus:

numPackets = 3265, numSkips = 0, numBadChecksum = 0

skip% = 0.00%, badChksum% = 0.00%

src=1, numSrcPackets=3265, 100.00% of all packets

total num device packets received = 3265

total num packets per second = 243.42

effective baudrate = 24342.47

baudrate efficiency vs 38400 line baudrate = 63.39%
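
The derived figures in these logs follow from the raw counts. The sketch below reproduces them for the three-device run. It rests on two assumptions that are inferred from the numbers rather than stated in the log: each byte costs 10 bits on the wire (8 data bits plus start and stop), and "device packets received" means packets with a good checksum. The function name `derived_stats` is hypothetical.

```python
def derived_stats(elapsed_s, num_packets, num_skips, num_bad_checksum,
                  packet_bytes=10, bits_per_byte=10, line_baud=38400):
    """Recompute the percentages and rates printed in the test log."""
    good_packets = num_packets - num_bad_checksum      # assumed definition
    packets_per_sec = good_packets / elapsed_s
    effective_baud = packets_per_sec * packet_bytes * bits_per_byte
    return {
        "skip_pct": 100.0 * num_skips / num_packets,
        "bad_checksum_pct": 100.0 * num_bad_checksum / num_packets,
        "good_packets": good_packets,
        "packets_per_sec": packets_per_sec,
        "effective_baud": effective_baud,
        "efficiency_pct": 100.0 * effective_baud / line_baud,
    }

if __name__ == "__main__":
    # Raw counts taken from the three-device log
    stats = derived_stats(13.957, 3525, 434, 118)
    for name, value in stats.items():
        print(f"{name} = {value:.2f}")
```

The results agree with the log to within rounding of the printed elapsed time.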

Comparing the two runs, the single-device run has no collisions at all, yet its average packet rate is very slightly lower (243.42 versus 244.10 packets per second). This is because the average delay when one device is on the bus is 1.5ms (the 1ms wait plus an average random wait of 0.5ms), while the average delay when 3 devices are on the bus goes down to 1.25ms, because the shortest of the 3 random waits wins and the minimum of 3 such waits averages 0.25ms. As counter-intuitive as it may be, it appears that 3 devices sending packets as fast as they can and losing 12% of them actually get slightly more through than 1 device sending alone on the bus, where none of its packets get lost.
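
The 1.5ms and 1.25ms figures can be checked with a short calculation. The sketch below uses the fact that the expected minimum of n independent uniform waits on [0, 1ms] is 1/(n+1) ms, and cross-checks it with a quick simulation. It assumes 10 bits on the wire per byte and ignores collisions entirely, so for 3 devices the predicted rate is an upper bound on bus slots, not on delivered packets. All function names are made up for this example.

```python
import random

def mean_gap_ms(n_devices, guard_ms=1.0, max_random_ms=1.0):
    """Guard time plus the expected minimum of n uniform random waits."""
    return guard_ms + max_random_ms / (n_devices + 1)

def simulated_gap_ms(n_devices, trials=100_000, seed=1):
    """Monte Carlo estimate of the same gap, as a sanity check."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += 1.0 + min(rng.uniform(0.0, 1.0) for _ in range(n_devices))
    return total / trials

def packets_per_second(n_devices, packet_bytes=10, bits_per_byte=10, baud=38400):
    """Bus slots per second if every slot is packet time plus the mean gap."""
    packet_ms = packet_bytes * bits_per_byte / baud * 1000.0
    return 1000.0 / (packet_ms + mean_gap_ms(n_devices))

if __name__ == "__main__":
    for n in (1, 3):
        print(n, mean_gap_ms(n), round(simulated_gap_ms(n), 3),
              round(packets_per_second(n), 2))
```

For one device this model predicts roughly 243.7 packets per second, which is close to the measured 243.42.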

It's worth contemplating this a bit to get your mind around it.

The 63% efficiency I’m seeing compares quite well against the theoretical maximums of 18.4% for Pure ALOHA and 36.8% for Slotted ALOHA. Furthermore, tightening up the 1ms wait plus up to 1ms more in random wait could push the efficiency up even further. But my conclusion is that for my purposes, the 243 packets per second is more than enough. (I don’t expect more than a few packets per second under normal circumstances.) And if I ever did need more bandwidth, upping the baudrate would seem to be the easiest way to get it.
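
For reference, the two ALOHA figures come from the standard textbook throughput formulas, S = G·e^(-2G) for Pure ALOHA and S = G·e^(-G) for Slotted ALOHA, where G is the offered load in packets per packet time. A minimal sketch that evaluates both at their peaks (the function names are just for this example):

```python
import math

def pure_aloha_throughput(g):
    """Pure ALOHA: a packet survives only if no one else starts within 2 packet times."""
    return g * math.exp(-2.0 * g)

def slotted_aloha_throughput(g):
    """Slotted ALOHA: the vulnerable window shrinks to 1 slot."""
    return g * math.exp(-g)

if __name__ == "__main__":
    # The peaks occur at G = 0.5 and G = 1.0 respectively
    print(f"Pure ALOHA max    = {100 * pure_aloha_throughput(0.5):.1f}%")
    print(f"Slotted ALOHA max = {100 * slotted_aloha_throughput(1.0):.1f}%")
```

The big difference here is that the lights listen before they talk, which neither ALOHA variant does.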

But for now, this test was very successful, and I believe I have a functioning peer-to-peer RS485 protocol to proceed with on the “smart lights” firmware.